I started implementing a coding agent using Homunculus. My plan was to demonstrate the framework, but I learned a hard lesson: the pure “emergence layer” of Homunculus was not a good fit for rigid coding tasks.

The issue was equilibrium.

I had designed a biological, emergent system where agents communicated via signals. But for coding, the system couldn’t figure out when it was done. I tried adding energy-based methods to detect task completion, but the agents couldn’t grasp the idea. I tried to solve the issue with endless Claude Code sessions, but whenever I asked the LLM to handle this “equilibrium detection,” it treated it as a mathematical optimization problem, something like gradient descent.

The result? Too many ticks, too much oscillation, and wasted time.
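To make the oscillation problem concrete, here is a minimal sketch. It is not the actual Homunculus code: the function name `settle_tick`, the `window`, `tol`, and `max_ticks` parameters, and the two sample energy traces are all assumptions made up for illustration. It treats "done" as "the energy reading has stayed within a small band for several ticks in a row" and shows that an oscillating signal never meets that condition.

```python
from collections import deque
from typing import Iterable, Optional


def settle_tick(
    energies: Iterable[float],
    window: int = 5,
    tol: float = 0.05,
    max_ticks: int = 200,
) -> Optional[int]:
    """Return the first tick where the last `window` readings span at most `tol`.

    Returns None if the signal never settles within `max_ticks`.
    All names and thresholds here are hypothetical.
    """
    recent = deque(maxlen=window)
    for tick, energy in enumerate(energies):
        if tick >= max_ticks:
            break  # tick budget exhausted, give up
        recent.append(energy)
        if len(recent) == window and max(recent) - min(recent) <= tol:
            return tick
    return None


# A trace that decays smoothly toward zero: it settles after a few ticks.
decaying = [0.5 ** t for t in range(50)]

# A trace that bounces between two levels: it never settles.
oscillating = [0.5 + 0.4 * (-1) ** t for t in range(50)]

print(settle_tick(decaying))
print(settle_tick(oscillating))
```

With a detector like this, an oscillating system only stops when the tick budget runs out, which is roughly the kind of wasted time described above.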

So, I pivoted. I realized that for coding, you can’t rely solely on biological emergence. You need a hybrid approach. I rearranged Homunculus (currently disabled) to work more like how we speculate GPT-5 might work: switching between “high reasoning” modes and “normal” modes.

For a long time, I planned to build a CLI tool similar to Claude Engineer. But then the giants arrived. Claude Code, Codex, and a wave of other AI editors dominated the space almost overnight. They were good. Really good. So, I put my idea on the shelf.

Claude Code quickly became my go-to. I used to think software development had become boring, but having a strong prototyping machine at my fingertips made it fun again. I have years of incomplete ideas stored away, and with this new power, I felt like I could rewrite the whole internet.

But as I leaned harder into these tools, the cracks started to show.

Bottomless Pits

I iterate heavily. At any given time, I might have four or five different projects attached to my workspace. Initially, this wasn’t a problem. Before the subscription packages, I paid for API usage tiers and barely hit the limits.

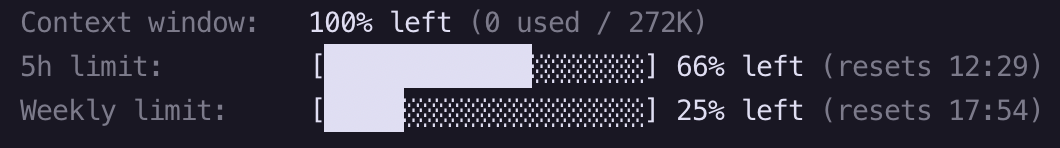

But recently, the walls have been closing in. The 5-hour limits tightened. Weekly caps were introduced. Even with extended plans, I kept hitting sudden walls. I’d watch “Plan Modes” burn thousands of tokens in the background to solve a simple problem, often with very little to show for the price.

Editors like Cursor and Antigravity are amazing, but they are trying to sell you something. They feel like bottomless pits. They keep the price the same but lower the usage cap, which is effectively a price hike. And the worst part? I felt addicted. As a developer, I couldn’t live without them anymore because I knew how much easier they made my life.

I looked for an escape hatch. I tried open-source alternatives like OpenCode, OpenHands, pi, and Mistral Vibe. While I liked them, most still wanted me to provide an API key. They weren’t explicitly designed for a seamless local AI experience; they were often just front-ends for other inference providers. It seems like whenever a project gets popular, a pricing page immediately appears and it becomes a CLI agent for Twitter folks. I wanted something different. I wanted to use the spare RTX 5060 sitting in my desktop, not rent a GPU in the cloud.

The Builder’s Perspective

You might ask: Why build this when there are dozens of competitors?

It is no secret that local AI inference will eventually get better. New architectures and models are making local hardware incredibly capable. PC builders want to sell more AI-native computers, so economically there will be supply. But beyond the tech, I am building this because I am a builder.

It doesn’t matter if it’s a massive architecture project, a piece of contemporary art, or just cooking a plain egg; everyone has a “secret sauce.” Everybody sees the world differently. People have a unique comprehension (in Turkish, we call this idrak: a specific way of perceiving and realizing the truth).

If I asked 10 developers to build an e-commerce app, most would build an Amazon clone. But a few wouldn’t. They wouldn’t follow “best practices” just to please SEO bots; they would develop for people. They would use their own perspective, be themselves, and create a unique medium.

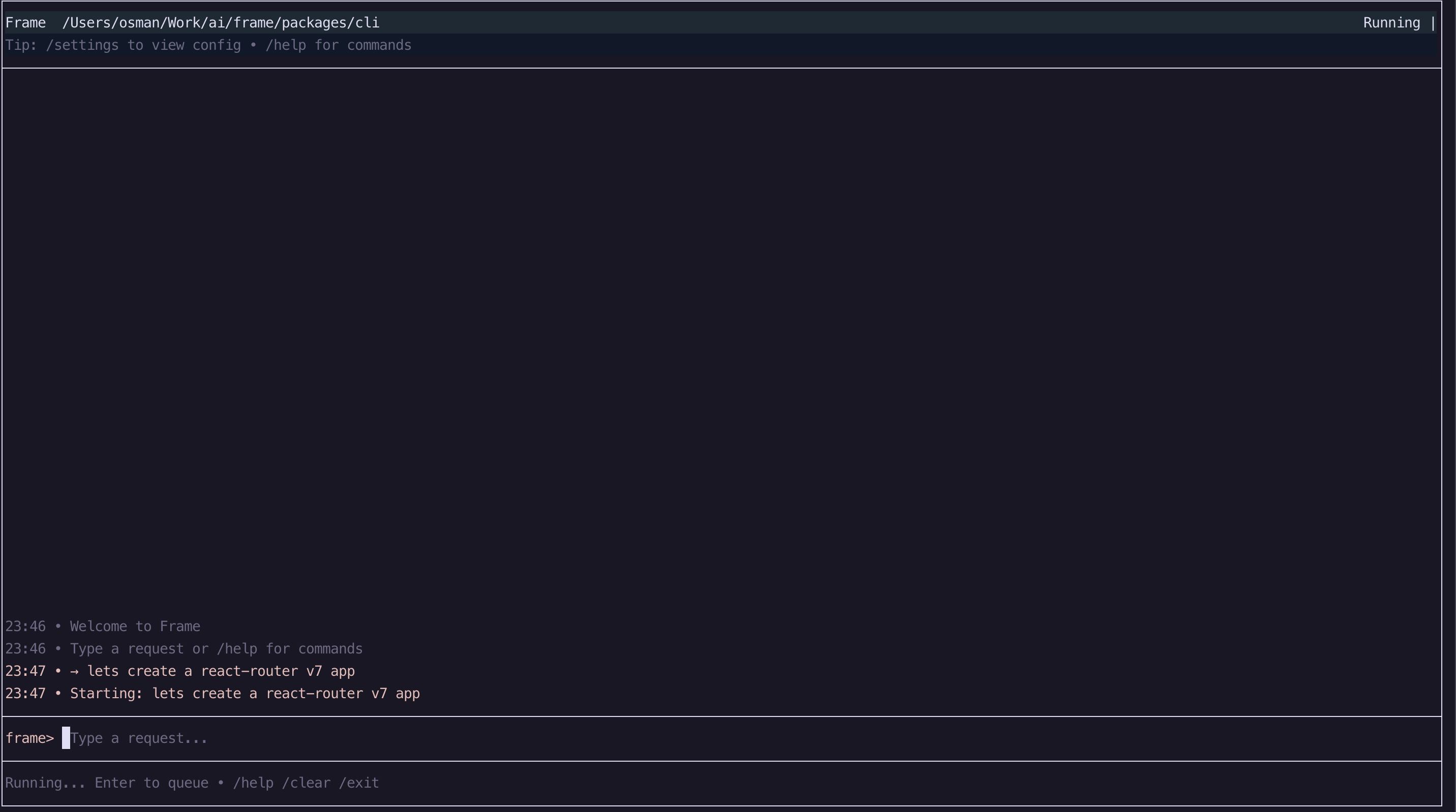

I want to see those builders. I enjoy using products developed by those few people who put their soul into them. Those who do not drive a white car. This CLI is my version. It is my understanding of what a coding tool should be.

So, what am I bringing to the table that is different?

There are two types of learning:

- Training: Hard-coded weights, slow to change, expensive.

- Memetics: Shared knowledge, cultural transmission, fast to change.

Big models already contain most of these memetics in their training data, because they were trained on bigger datasets. But my focus is running this on plain Ollama. I want an easy install and an easy run, on almost every computer.

How can a small local model overcome the knowledge gap?

I am using (kind of) Marvin Minsky’s Frame Theory. Minsky suggested that when we encounter a new situation (e.g., “Entering a Living Room”), we don’t analyze every pixel. We retrieve a Frame: a pre-existing data structure that holds our expectations (there should be a floor, a ceiling, maybe a sofa).

I think we can supply these Frames to small local models to fill the gaps. It is basically Copy-Paste Intelligence derived from docs, manuals, and bug reports. (Don’t say the R word.)

I am building a library of these “gap-filling” Frames: a Framebase. My agents don’t need to be geniuses; they just need to go to the Framebase and get the “Remix 3 setup frame” or the “uv install frame.”
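As a rough illustration of the idea, here is a minimal sketch of a Frame and a Framebase. This is not the real implementation: the `Frame` fields, the `Framebase` class, the keyword-overlap lookup, and the `build_prompt` helper are all hypothetical, and the frame contents are placeholder examples. The point is only that a frame is a small bundle of expectations that gets pasted in front of the task before a small model sees it.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Frame:
    """A named bundle of expectations (hypothetical structure)."""
    name: str
    triggers: Set[str]       # words that suggest this frame applies
    expectations: List[str]  # plain-text facts to hand to the model


class Framebase:
    """A tiny in-memory frame library with keyword-overlap lookup."""

    def __init__(self) -> None:
        self._frames: List[Frame] = []

    def add(self, frame: Frame) -> None:
        self._frames.append(frame)

    def lookup(self, task: str) -> Optional[Frame]:
        # Pick the frame whose triggers overlap most with the task words.
        words = set(task.lower().split())
        best, best_score = None, 0
        for frame in self._frames:
            score = len(frame.triggers & words)
            if score > best_score:
                best, best_score = frame, score
        return best


def build_prompt(task: str, frame: Optional[Frame]) -> str:
    """Prepend the frame's expectations to the task, if a frame matched."""
    if frame is None:
        return task
    notes = "\n".join(f"- {line}" for line in frame.expectations)
    return f"Known facts ({frame.name}):\n{notes}\n\nTask: {task}"


fb = Framebase()
fb.add(Frame(
    name="living room",
    triggers={"living", "room"},
    expectations=["There is a floor.", "There is a ceiling.", "Maybe a sofa."],
))
fb.add(Frame(
    name="uv install",
    triggers={"uv", "install", "python"},
    expectations=[
        "Dependencies are added with `uv add <package>`.",
        "Commands run inside the project environment with `uv run`.",
    ],
))

task = "install requests with uv"
print(build_prompt(task, fb.lookup(task)))
```

The lookup here is deliberately dumb. The agent doesn’t need to be clever about finding the frame; the value is in the frame’s contents.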

I don’t plan to add MCP, complicated planning modes, or complex protocols. I want everything to be ready out of the box. Plain text should be enough for the CLI to get the idea and use the right tools. Project context, a few learning layers, tools, and sub-agents should do a better job. I want an opinionated, batteries-included tool.

I am building this to free myself from the subscription cycle. I’m still iterating heavily, adding and removing parts, and mostly cleaning up vibe-coding artifacts to make the app more reliable. If I can keep up this tempo, I hope to switch to my own tool for mainstream tasks within 6 months.